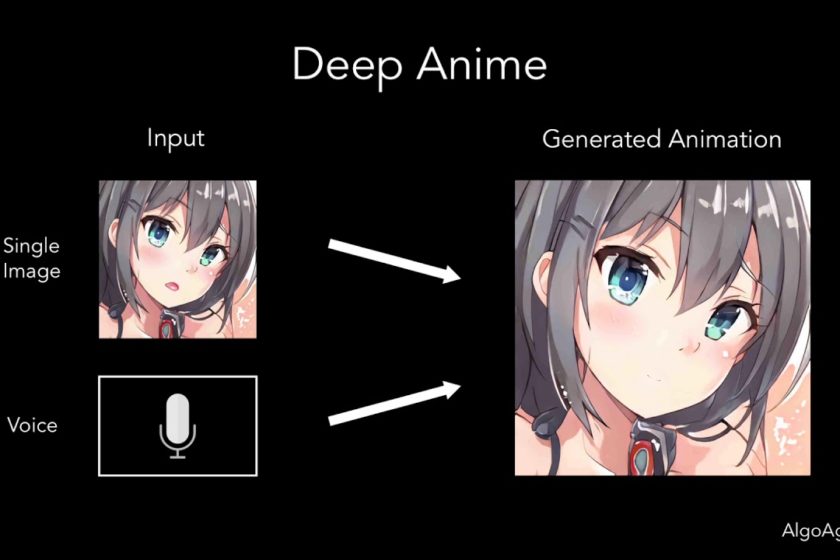

A deep-learning engine can automatically generate talking animation based on a single image and a voice recording. AlgoAge Co., Ltd in Minato, Tokyo debuted the DeepAnime artificial intelligence engine on August 6. The company’s press release states the engine creates natural animation, including mouth movement and blinking, with only the two provided assets.

AlgoAge stated that the program can serve purposes ranging from entertainment, such as adding your own voice to any image to voice the character, to professional needs, such as reducing costs for games with minimal animation.

The tech industry has continued to make strides in developing artificial intelligence programs to help with the anime production workload. Dwango’s Yuichi Yagi debuted an AI program that creates in-between animation in 2017. Dwango later announced that the program was used for some parts of FLCL Progressive. Imagica Group and OLM Digital joined forces with the Nara Institute of Science and Technology (NAIST) to develop a technique for automatic coloring, further expanding AI options. Earlier this month, researchers Jun-Ho Kim, Minjae Kim, Hyeonwoo Kang, and Kwanghee Lee at video game company NCSoft (Guild Wars 2) introduced a program that uses Generative Adversarial Networks (GANs) to transform real-life people into anime characters.

The manga publisher Hakusensha has begun using the PaintsChainer automatic coloring program for some of its online manga releases.

Source: PR Times